A recent Economist article got me thinking about the history of language technologies:

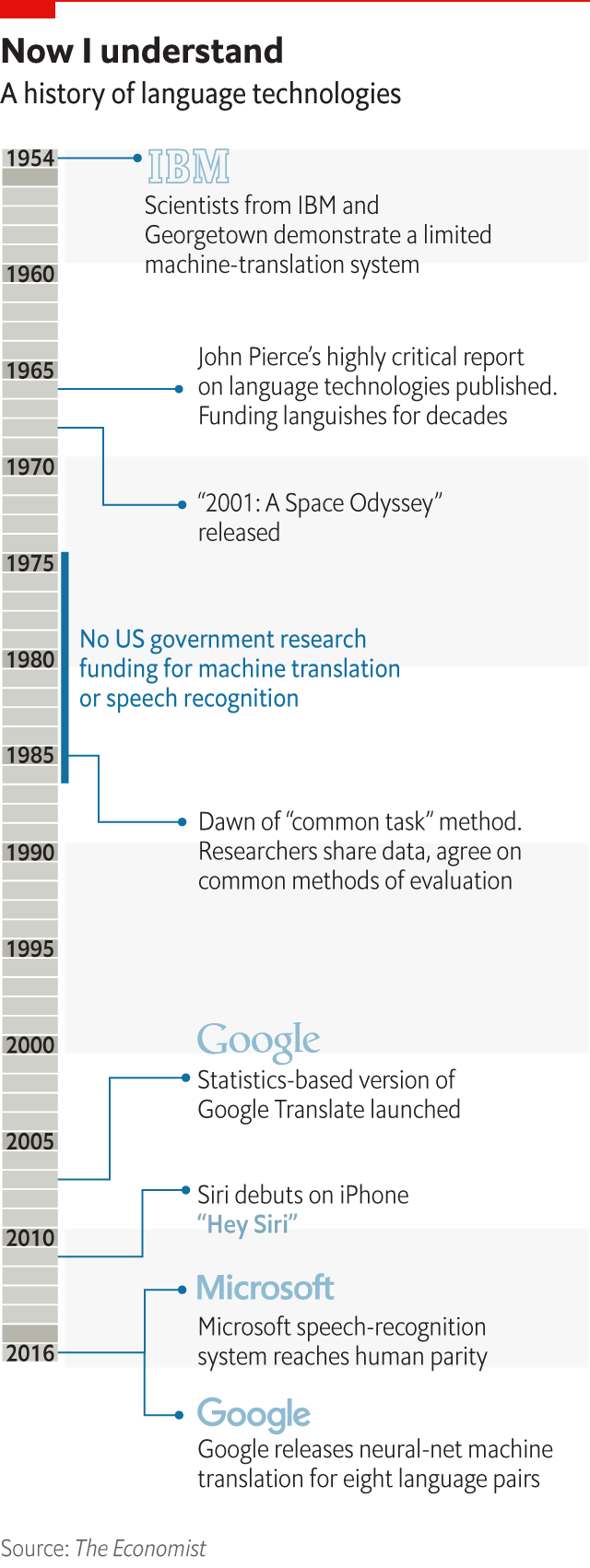

The article gives a good overview of language technologies like Machine Translation and Speech Recognition, with more depth and less exaggeration about recent advances in AI than many similar publications. Its timeline starts too late, however, beginning in 1954.

The article, and its wrong date, reminded me of a recent conversation I had with Ron Kaplan about the early days of Artificial Intelligence. Ron is currently the Chief Scientist in Amazon’s search division, A9, and he is also responsible for my day job! When he heard I was looking for work last year, he brought me into the Amazon Web Services (AWS) division. AWS is the largest cloud services provider, and I now run product for Natural Language Processing and Machine Translation there, building language technologies for millions of people and organizations world-wide.

I first met Ron about 10 years ago, when we were both at a search startup called Powerset. We crossed paths again when I returned to grad school at Stanford, where he took part in a class on the history of Computational Linguistics run by two other current/future legendary language technologists, Martin Kay and Dan Jurafsky. When we caught up recently, I brought up a story that had stuck with me since that class: Ron had helped build a commercial language technology product that predated computers.

Their technology was a spell-checker for an electronic typewriter. You inserted their device into the cable between the keyboard and the ribbon. The device had a dictionary of words and possible prefixes and suffixes (“-ing”, “-ed”, etc.), and it would ‘ping’ if it thought you had misspelled a word. The business model was that it would save ribbons, as fewer words needed to be retyped. It wasn’t on the market for long before electronic typewriters were replaced by computers with word processors, and software spell-checkers replaced their hardware spell-checker.
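To make the dictionary-plus-affixes idea concrete, here is a minimal sketch of that kind of lookup. It is an illustration under assumptions, not a reconstruction of the actual product: the word list, the prefix and suffix lists, and the `is_known` and `check` names are all hypothetical, and real English morphology (e.g. “type” + “-ing” → “typing”) needs more rules than simple stripping.

```python
# Hypothetical stems and affixes: illustrative only, not the device's real dictionary.
STEMS = {"walk", "jump", "play", "spell", "check", "print"}
PREFIXES = ("re", "un")
SUFFIXES = ("ing", "ed", "er", "s")


def is_known(word: str) -> bool:
    """Accept a word if stripping at most one known prefix and one known suffix leaves a stem."""
    word = word.lower()
    for prefix in ("",) + PREFIXES:
        for suffix in ("",) + SUFFIXES:
            if not (word.startswith(prefix) and word.endswith(suffix)):
                continue
            stem = word[len(prefix):len(word) - len(suffix)]
            if stem in STEMS:
                return True
    return False


def check(text: str) -> list[str]:
    """Return the words that would make the device 'ping' (i.e. are not recognized)."""
    flagged = []
    for token in text.split():
        word = token.strip(".,;:!?\"'")  # ignore surrounding punctuation
        if word and not is_known(word):
            flagged.append(word)
    return flagged


print(check("walked, replaying spellling."))  # ['spellling']
```

Storing stems plus a handful of affixes, rather than every inflected form, keeps the dictionary small, which presumably mattered for a device with very limited memory.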

I still love the idea of hardware-based language technologies. Today, even devices that seem hardware-based, like personal home assistants, are built on generic hardware, with most of the specialized processing done in software.

Going back even further, I wondered: were there analogue natural language processing technologies? Before the transistor revolution, did people try to build language technology products on machines powered by vacuum tubes?

Yes, people made language technologies out of vacuum tubes!

Introducing the Voder:

Source: www.youtube.com/watch?v=0rAyrmm7vv0

At the 1939 World’s Fair, Bell Telephone Laboratories revealed the Voder. It was invented by Homer Dudley, surely the most ‘1939’ name you can think of. Played by an operator on a specialized keyboard, the Voder also made it to California the same year, with demonstrations at the Golden Gate International Exposition on Treasure Island in the San Francisco Bay Area, as part of a celebration of engineering advances that included the recently constructed Golden Gate Bridge. I’m just going to leave this sentence here to sit with you for a while:

- Homer Dudley took his vacuum-tube speech synthesizer to Treasure Island to celebrate the Golden Gate Bridge

I like to imagine Homer Dudley’s long sea journey to the San Francisco Bay Area with his Voder. Every day, he would stand on the deck, staring out to where the waves meet the horizon, thinking about how to create technology to better mankind. Every evening, he would descend into the cargo hold where the Voder was stored, pat it reassuringly, and make sure that the straps keeping it secure were tight (but not uncomfortably tight). I call this journey Voder’s Odyssey.

Alas, the Voder was not widely sold. It is difficult to imagine what its use cases would have been. It could have helped speech-impaired people, but many conditions that impair speech also impair motor control, which would make transporting and operating the Voder very difficult.

Fortunately, Homer Dudley succeeded elsewhere. The knowledge that went into the Voder also went into compressing people’s speech in early telephone conversations. In addition, he worked with Alan Turing on encryption during the Second World War. Finally, his work on voice synthesizers did lead to technologies that helped speech-impaired individuals, most famously Stephen Hawking. We should all aspire to be Homer Dudleys.

As an interesting aside, Stephen Hawking has chosen to keep his now dated-sounding synthesized voice, as it has become so closely associated with him. It is a fascinating early example of how our electronic extensions are becoming important parts of our self-identity, a trend that will only grow as language technologies become more ubiquitous.

So what about the Economist article? It looks like they started their timeline at least 15 years too late, but I still recommend reading it. It gives a great overview of the field’s progression since 1954, including a topic that is dear to my heart: extending language technologies to less widely spoken languages.

Robert Munro

February, 2017

PS: Perhaps there was some language technology product earlier than 1939 that I don’t yet know about? Drop me a line: I’d love to hear about it!