“Processing short-message communications in low-resource languages”

I completed my PhD at Stanford University earlier this year. The dissertation is published by Stanford under a Creative Commons Attribution license:

The work explores the nature of the variation inherent to short message communications in the majority of the world’s languages, and the extent to which modeling this variation can improve natural language processing systems. It is safe to say that text messaging is used by a more linguistically diverse set of people than any prior digital communication technology. Many languages that had only ever been spoken are now being written for the first time, in short bursts of one or two sentences.

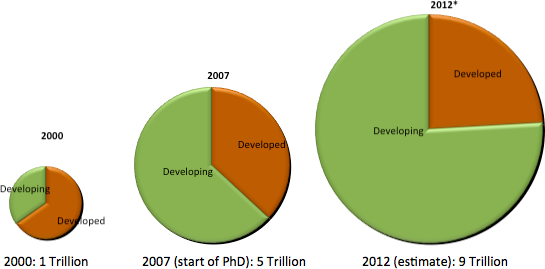

The shift to a diverse texting world. The size of the graphics indicates the number of text messages sent, and the segments show the number of cellphone subscribers in what the International Telecommunication Union (ITU) defines as the ‘developing’ or ‘developed’ world.

The spread of technology has not been matched by a similar increase in the capacity to process and understand this information. Ten years ago, hearing most low-resource languages meant days of travel. Today, most of the world’s 7,000 languages can be found on the other end of your phone, but we have no speech recognition, machine translation, search technologies, or even spam filters for 99% of them. Natural language processing is one way to leverage our limited resources across large amounts of data, and the work here shows that it is possible to build accurate systems for most languages, despite the ubiquitous written variation and lack of existing resources. This should be encouraging for anyone looking to use digital technologies to support linguistically diverse populations, but it raises as many questions as it answers. In a way, putting a phone in the hands of everybody on the planet is the easy part. Understanding everybody is going to be more complicated.

Text messaging may be the most linguistically diverse form of digital communication that has ever existed, but it is almost completely unstudied. The dissertation studied three sets of short messages, in Haitian Kreyol, Chichewa, and Urdu.

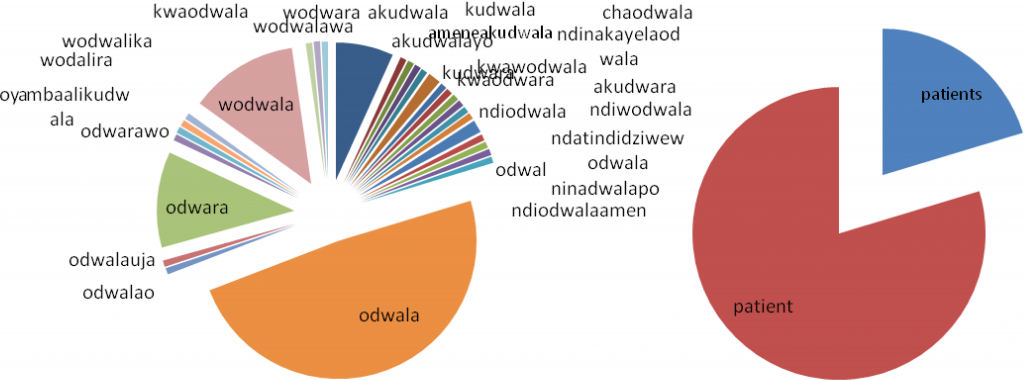

The complication for natural language processing: the number of spellings of 'odwala' ('patient') in text messages between Chichewa-speaking health workers, compared to the number of spellings of 'patient' in the English translations of those messages.

The dissertation first looked at automated methods for modeling subword variation, finding that language-independent methods can perform as accurately as language-specific ones, indicating a broad deployment potential. Turning to classification, it showed that by generalizing across spelling variations we can, for example, build classification systems that more accurately distinguish emergency messages from less time-critical ones, even when incoming messages contain many previously unseen spellings of words. Looking across languages, the words that vary the least in translation are named entities, so it is possible to leverage loosely aligned translations to automatically extract the names of people, places and organizations. Taken together, the hope is that these results will lead to more accurate natural language processing systems for low-resource languages and, in turn, to better services for their speakers. I expand on each of these a little below. Full accounts are in the dissertation itself, and much of the work also appears in the publications arising from it.

Modeling subword variation

This part of my dissertation evaluated methods for modeling subword variation. It looked at segmentation strategies, comparing language-specific and language-independent methods for distinguishing stems from affixes, such as ‘go’ and ‘-ing’ in the English verb ‘going’. It then compared normalization strategies for spelling alternations that arise from phonological or orthographic variation, such as the ‘recognize’ and ‘recognise’ variants in English, or more-or-less phonetic spellings like the ‘z’ in ‘cats and dogz’. The language-specific segmentation methods were created from Sam Mchombo’s Syntax of Chichewa. The language-independent segmentation methods built on Sharon Goldwater, Thomas L. Griffiths and Mark Johnson’s ‘A Bayesian framework for word segmentation: Exploring the effects of context’, adapted to segmenting word-internal morpheme boundaries. The language-specific normalization methods were created from Steven Paas’ English-Chichewa/Chinyanja dictionary; they are compared to normalization methods applicable to any language written in Roman script, and to language-independent noise-reduction algorithms.
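To make the idea of a normalizer "applicable to any language utilizing Roman script" concrete, here is a minimal Python sketch. The rules (lowercasing, stripping diacritics, discarding non-letters, collapsing repeated letters) and the names `normalize_roman` and `group_variants` are hypothetical choices for this example, not the methods evaluated in the dissertation.

```python
import re
import unicodedata
from collections import defaultdict


def normalize_roman(token: str) -> str:
    """Hypothetical normalizer for any Roman-script language (illustrative rules only)."""
    # Lowercase, then decompose accented characters so accents become separate marks.
    decomposed = unicodedata.normalize("NFKD", token.lower())
    # Drop the combining marks and any non-letter noise such as punctuation or digits.
    letters = "".join(
        ch for ch in decomposed if ch.isalpha() and not unicodedata.combining(ch)
    )
    # Collapse runs of the same letter, e.g. "odwalaa" -> "odwala".
    return re.sub(r"(.)\1+", r"\1", letters)


def group_variants(tokens):
    """Group surface spellings under their shared normalized key."""
    groups = defaultdict(set)
    for tok in tokens:
        groups[normalize_roman(tok)].add(tok)
    return dict(groups)


print(group_variants(["odwala", "Odwalaa", "ODWALA!", "odwalla", "malaria"]))
```

Collapsing repeated letters trades precision for recall: it also merges spellings that are legitimately distinct (English ‘book’ becomes ‘bok’), which is one reason hand-crafted language-specific rules can still edge out generic ones.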

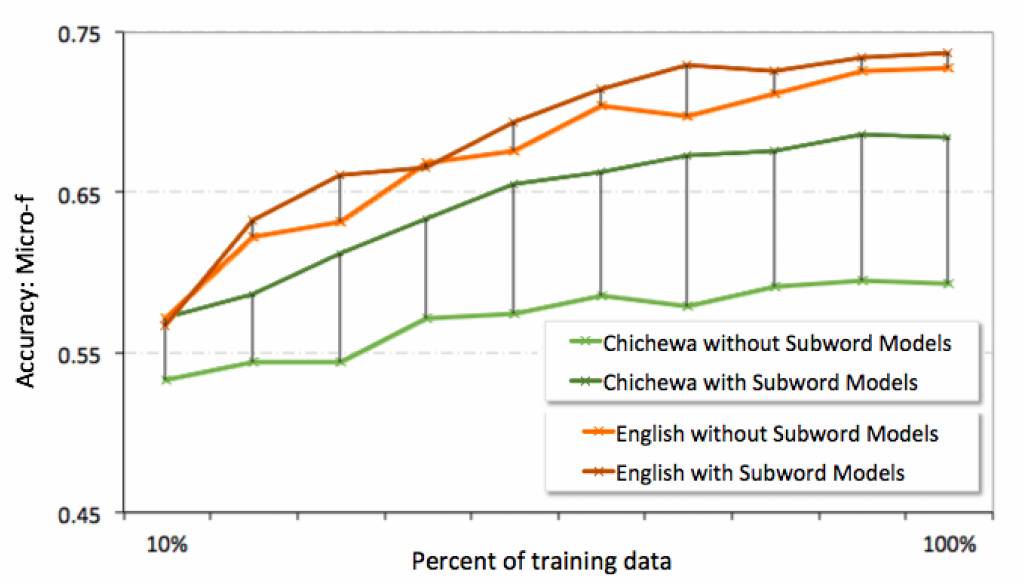

For morphological and normalization strategies alike, the language independent methods performed almost as accurately as the language specific methods, indicating a broad deployment potential. Overall, these results are promising, finding that language independent unsupervised methods often perform as well as language specific hand-crafted methods. When performance is evaluated in terms of deployment within a supervised classification system to identify medical labels like ‘patient-related’ and ‘malaria’ the average gain in accuracy when introducing subword models was F=0.206, with an error reduction of up to 63.8% for specific labels. This indicates that while subword variation is a persistent problem in natural language processing, especially with the prevalence of word-as-feature systems, this variation can itself be modeled in a robust manner that can be deployed with few or no prior resources for a given language. In conclusion, while subword variation is a widespread feature of language, it is possible to model this variation in robust, language independent ways.

Comparing subword models for Chichewa and English, showing why they are needed for Chichewa, with more cross-linguistically typical spelling variation.
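To see why subword models help a word-as-feature classifier, compare the features that two spellings of the same word share. This minimal sketch uses character trigrams as a stand-in for the subword models; the function names and the spelling ‘odwara’ are hypothetical, chosen only for illustration.

```python
def word_features(message: str) -> set:
    """Classic word-as-feature representation: one feature per lowercased token."""
    return {f"w={tok}" for tok in message.lower().split()}


def char_ngram_features(message: str, n: int = 3) -> set:
    """Subword representation: character n-grams of each token, with boundary markers."""
    feats = set()
    for tok in message.lower().split():
        padded = f"<{tok}>"
        for i in range(len(padded) - n + 1):
            feats.add(f"c={padded[i:i + n]}")
    return feats


def jaccard(a: set, b: set) -> float:
    """Share of features that two representations have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


known, unseen = "odwala", "odwara"  # 'odwara' is a hypothetical unseen spelling
print(jaccard(word_features(known), word_features(unseen)))  # 0.0
print(jaccard(char_ngram_features(known), char_ngram_features(unseen)))  # about 0.33
```

A classifier trained on word features has no evidence at all about the unseen spelling, while the shared trigrams (‘<od’, ‘odw’, ‘dwa’) let it transfer part of what it learned from ‘odwala’.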

Classification of short message communications

This part of my research extended the work on broad-coverage subword modeling to classification. Document classification is a very diverse field, but the literature review showed that past research into the classification of short messages is relatively rare, despite the prevalence of text messaging as a global form of communication. Several aspects of the classification process were investigated here, each shedding light on subword variation, cross-linguistic applicability, or the potential to actually deploy a system.

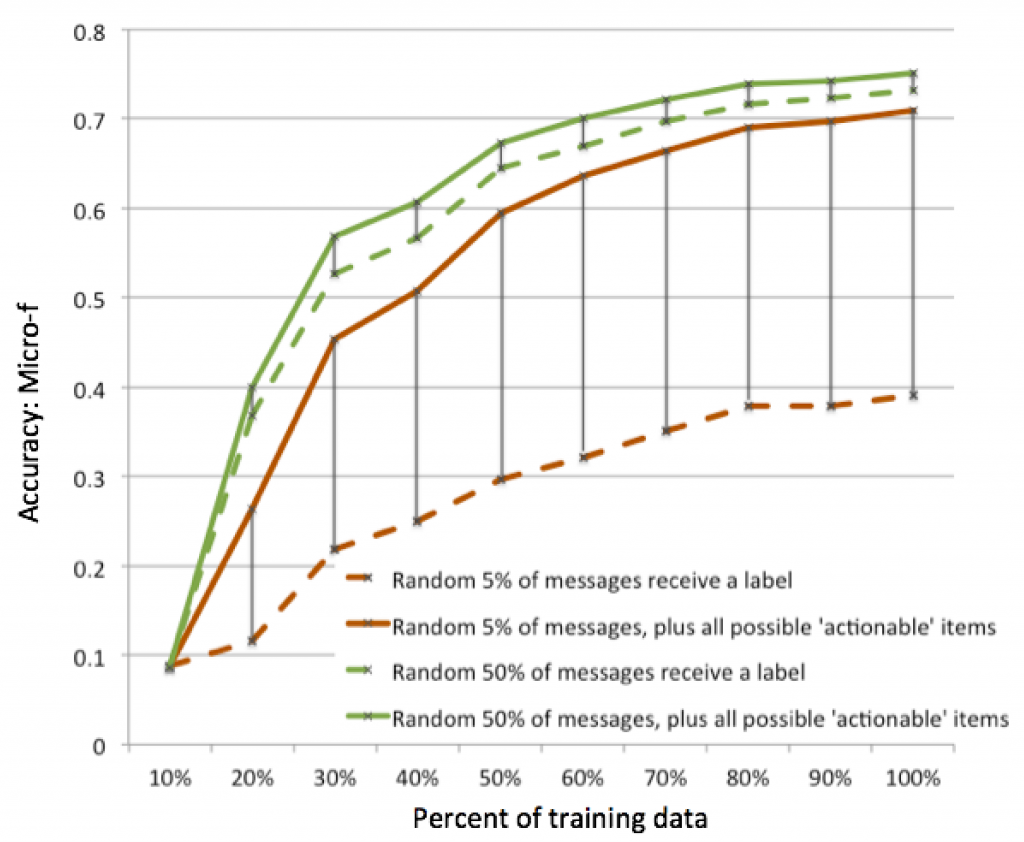

Active learning to prioritize time-critical messages: by deliberately sampling the time-critical 'actionable' messages, the system can incrementally prioritize new messages with nearly the same accuracy using only one tenth of the (manual) workforce.

Prioritization is especially important for the data used here, which was taken from Mission 4636, an initiative I coordinated following the 2010 earthquake in Haiti. Mission 4636 processed emergency text messages from the Haitian population, using crowdsourced workers to translate and geolocate the information for responders. My biggest fear when running this as a crowdsourced effort was that the volume would overwhelm the workforce, delaying the processing of important messages. This proved not to be the case: the (predominantly Haitian) workers and volunteers processed the messages with a median turnaround of only 5 minutes. It is easy to imagine a situation with an even higher volume of messages, where human processing could not keep pace and even an hour’s delay could mean the difference between life and death. The volume of information coming out of crisis-affected regions is only likely to increase, so these kinds of technologies will be key to processing it.
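The prioritization described in the active-learning figure can be sketched as follows, assuming a simple word-weight scorer; the dissertation's actual models and sampling strategy may differ, and `train_word_weights`, `score` and `select_for_review` are hypothetical names. Each round, the messages most likely to be actionable go to the limited human workforce first, and the rest wait.

```python
import math
from collections import Counter


def train_word_weights(labeled):
    """labeled: list of (message, is_actionable). Returns add-one-smoothed log-odds per word."""
    pos, neg = Counter(), Counter()
    for message, actionable in labeled:
        (pos if actionable else neg).update(message.lower().split())
    vocab = set(pos) | set(neg)
    pos_total = sum(pos.values()) + len(vocab)
    neg_total = sum(neg.values()) + len(vocab)
    return {
        w: math.log((pos[w] + 1) / pos_total) - math.log((neg[w] + 1) / neg_total)
        for w in vocab
    }


def score(message, weights):
    """Higher scores mean the message looks more actionable; unknown words count as 0."""
    return sum(weights.get(w, 0.0) for w in message.lower().split())


def select_for_review(unlabeled, weights, budget):
    """Send the `budget` most likely actionable messages to workers now; defer the rest."""
    ranked = sorted(unlabeled, key=lambda m: score(m, weights), reverse=True)
    return ranked[:budget], ranked[budget:]


labeled = [("need water urgently", True), ("thank you radio", False)]
unlabeled = ["urgently need food", "thank you", "hello"]
review, deferred = select_for_review(unlabeled, train_word_weights(labeled), budget=1)
print(review)  # ['urgently need food']
```

In a full loop, the batch that workers label would be appended to `labeled` and the weights retrained before the next round, so the scorer improves as the workforce processes the highest-priority messages.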

Domain dependence, a related deployment hurdle, was also investigated, by comparing cross-domain accuracy when models trained on text messages are applied to Twitter and vice versa. Despite both being short message systems about the same events, cross-domain accuracy is poor, supporting the analysis that the two platforms are used differently. However, some of the accuracy can be reclaimed in certain contexts by modeling the prior probability of labels per message source.
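One simple way to model the prior probability of labels per message source is a prior-shift correction: estimate P(label | source) from training data and substitute it for the classifier's global prior, so that P(label | text, source) ∝ P(label | text) · P(label | source) / P(label), assuming text and source are conditionally independent given the label. This is a standard Bayesian adjustment offered as an assumption, not necessarily the formulation in the dissertation; the source names, labels and counts below are invented.

```python
from collections import Counter, defaultdict


def label_priors_by_source(examples, labels, alpha=1.0):
    """examples: list of (source, label). Returns {source: {label: P(label | source)}}."""
    counts = defaultdict(Counter)
    for source, label in examples:
        counts[source][label] += 1
    priors = {}
    for source, c in counts.items():
        # Additive (Laplace) smoothing so unseen labels keep a small probability.
        total = sum(c.values()) + alpha * len(labels)
        priors[source] = {lab: (c[lab] + alpha) / total for lab in labels}
    return priors


def adjust_for_source(posterior, global_prior, source_prior):
    """Swap the global label prior in P(label | text) for the source's prior, then renormalize."""
    scores = {lab: p * source_prior[lab] / global_prior[lab] for lab, p in posterior.items()}
    z = sum(scores.values())
    return {lab: s / z for lab, s in scores.items()}


labels = ["actionable", "other"]
examples = (
    [("sms", "actionable")] * 3 + [("sms", "other")]
    + [("twitter", "other")] * 3 + [("twitter", "actionable")]
)
priors = label_priors_by_source(examples, labels)
posterior = {"actionable": 0.5, "other": 0.5}  # hypothetical classifier output
print(adjust_for_source(posterior, {"actionable": 0.5, "other": 0.5}, priors["twitter"]))
```

The same uncertain classifier output is pushed toward ‘actionable’ for a source where actionable messages are common and away from it for a source where they are rare, which is the kind of correction that can reclaim some cross-domain accuracy.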

Information Extraction

The final part of the dissertation looked at information extraction, with a particular focus on Named Entity Recognition. The research builds on the observation that the names of people, locations and organizations are the words least likely to change form across translations, using it to build systems that accurately identify named entities in loosely aligned translations of short message communications. Named Entity Recognition is the cornerstone of a number of critical Information Extraction tasks. In actual deployment scenarios, it could support the geolocation of events and the identification of information about missing people, two functions that were performed manually on this data. This approach, novel for Named Entity Recognition, has three steps.

First, candidate named entities are generated from the messages and their translations (the parallel texts). The best method found for this was to calculate the local deviation in normalized edit distance. For each message and its translation, the cross-language word/phrase pairs that are most similar according to edit distance are extracted, and their deviation is calculated relative to the average similarity of all word/phrase pairs across the translation. In other words, this step looks for phrases that are highly similar across the languages within translations that are otherwise very different. This method alone gives a little over 60% accuracy for both languages.
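A minimal sketch of this candidate-generation step, under stated assumptions: it compares single words only (not phrases), normalizes edit distance by the longer word's length, and uses a hypothetical deviation threshold of 0.3. The Kreyol/English message pair is invented for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def similarity(a: str, b: str) -> float:
    """1 minus edit distance normalized by the longer string's length."""
    longest = max(len(a), len(b))
    return 1.0 - levenshtein(a, b) / longest if longest else 1.0


def candidate_entities(message: str, translation: str, threshold: float = 0.3):
    """Return (word, aligned word, deviation) for words far more similar than the pair's average."""
    src = list(dict.fromkeys(message.lower().split()))
    tgt = list(dict.fromkeys(translation.lower().split()))
    if not src or not tgt:
        return []
    sims = {(s, t): similarity(s, t) for s in src for t in tgt}
    mean_sim = sum(sims.values()) / len(sims)  # baseline similarity for this translation pair
    candidates = []
    for s in src:
        best_t = max(tgt, key=lambda t: sims[(s, t)])
        deviation = sims[(s, best_t)] - mean_sim
        if deviation >= threshold:
            candidates.append((s, best_t, deviation))
    return sorted(candidates, key=lambda c: c[2], reverse=True)


print(candidate_entities("mwen nan jacmel mwen bezwen dlo", "i am in jacmel i need water"))
```

Measuring deviation from the pair's own average, rather than using a fixed similarity cutoff, is what makes the method "local": a match only stands out if the rest of the translation looks very different.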

In the second step, the candidate named entities are used as seeds to bootstrap predictive models. The context, subword, word-shape and alignment features of the entity candidates are used in a model that is applied to all candidate pairs, predicting named entities across all translated messages. This raises the accuracy to F=0.781 for Kreyol and F=0.840 for English.
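The second step can be sketched as two pieces: a feature extractor covering the four feature types named above, and a rule that turns the first step's deviations into positive and negative seeds. The feature names, the thresholds `pos_min` and `neg_max`, and the choice to treat low-deviation words as negative seeds are assumptions for illustration; any standard classifier (for example, logistic regression) could then be trained on the seeds and applied to every candidate.

```python
import re


def word_shape(token: str) -> str:
    """Map uppercase to X, lowercase to x, digits to d, then collapse repeats: 'Jacmel' -> 'Xx'."""
    shape = re.sub(r"[A-Z]", "X", token)
    shape = re.sub(r"[a-z]", "x", shape)
    shape = re.sub(r"[0-9]", "d", shape)
    return re.sub(r"(.)\1+", r"\1", shape)


def candidate_features(tokens, i, alignment_sim):
    """Context, subword, word-shape and alignment features for the candidate at position i."""
    tok = tokens[i]
    prev_tok = tokens[i - 1].lower() if i > 0 else "<s>"
    next_tok = tokens[i + 1].lower() if i + 1 < len(tokens) else "</s>"
    return {
        f"prev={prev_tok}": 1.0,
        f"next={next_tok}": 1.0,
        f"prefix={tok[:3].lower()}": 1.0,
        f"suffix={tok[-3:].lower()}": 1.0,
        f"shape={word_shape(tok)}": 1.0,
        "align_sim": alignment_sim,
    }


def seed_labels(scored, pos_min=0.5, neg_max=0.1):
    """Turn (token, deviation) pairs into training seeds; ambiguous candidates are left out."""
    seeds = []
    for token, deviation in scored:
        if deviation >= pos_min:
            seeds.append((token, 1))
        elif deviation <= neg_max:
            seeds.append((token, 0))
    return seeds


print(candidate_features("Mwen nan Jacmel".split(), 2, alignment_sim=1.0))
print(seed_labels([("jacmel", 0.9), ("dlo", 0.2), ("mwen", 0.05)]))  # [('jacmel', 1), ('mwen', 0)]
```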

Because there are candidate alignments between the languages, the second step can also learn to identify named entities in both languages jointly: for each aligned candidate entity, the feature space is extended across both languages, so the context and word-shape features of the parallel text inform the prediction on each side. This raises the accuracy to F=0.846 for Kreyol and F=0.861 for English.
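Extending the feature space across an alignment can be as simple as prefixing each side's features with its language and taking the union, so that one model sees, for example, both the Kreyol and the English context of ‘Jacmel’. The `ht`/`en` prefixes, the small feature set, and the function names are hypothetical choices for this sketch.

```python
def side_features(tokens, i):
    """Context and capitalization features for token i on one side of the alignment."""
    tok = tokens[i]
    return {
        f"prev={tokens[i - 1].lower() if i > 0 else '<s>'}": 1.0,
        f"next={tokens[i + 1].lower() if i + 1 < len(tokens) else '</s>'}": 1.0,
        f"capitalized={tok[:1].isupper()}": 1.0,
    }


def joint_features(src_tokens, i, tgt_tokens, j, src_lang="ht", tgt_lang="en"):
    """One feature vector for an aligned candidate pair, with features from both languages."""
    joint = {f"{src_lang}:{k}": v for k, v in side_features(src_tokens, i).items()}
    joint.update({f"{tgt_lang}:{k}": v for k, v in side_features(tgt_tokens, j).items()})
    return joint


print(joint_features("Mwen nan Jacmel".split(), 2, "I am in Jacmel".split(), 3))
```

Because the same joint vector describes the aligned pair, a prediction made from it can label the entity on both sides at once, which is how evidence in one language can help the other.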

In the third and final step, a supervised model is trained on existing annotated data in English, the high-resource language. This model tags the English translations of the messages, and those tags are in turn leveraged across the candidate entity alignments. This raises the accuracy to F=0.904 for both Kreyol and English. The Kreyol results are approaching accuracies that would be competitive with purely supervised approaches trained on tens of thousands of examples.
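The text does not specify exactly how the English tags are leveraged across the alignments. One plausible reading, sketched below, is to add the aligned English tag as an extra feature for the Kreyol candidate rather than copying it over as a hard label. The English tagger is stubbed out with a hand-written dictionary, and `project_tags` and `min_score` are hypothetical.

```python
def project_tags(alignments, english_tags, min_score=0.5):
    """Carry English tagger output across candidate alignments as extra features.

    alignments:   list of (kreyol_index, english_index, alignment_score)
    english_tags: {english_index: entity type} from a supervised English tagger
    Returns {kreyol_index: feature}, keeping the highest-scoring alignment per Kreyol token.
    """
    best = {}
    for k_idx, e_idx, score in alignments:
        tag = english_tags.get(e_idx)
        if tag is None or score < min_score:
            continue
        if k_idx not in best or score > best[k_idx][0]:
            best[k_idx] = (score, f"en_tagger={tag}")
    return {k_idx: feature for k_idx, (_, feature) in best.items()}


# Hypothetical tagger output for "I am in Jacmel": token 3 is a location.
english_tags = {3: "LOC"}
alignments = [(2, 3, 1.0), (0, 0, 0.2), (1, 2, 0.3)]
print(project_tags(alignments, english_tags))  # {2: 'en_tagger=LOC'}
```

Using the projected tag as a feature, instead of a hard label, lets the model learn how far to trust the English tagger through noisy, loosely aligned translations.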

I conclude that this novel approach to Named Entity Recognition is a promising new direction for information extraction in low-resource languages. Most low-resource languages will never have large manually annotated training corpora for Named Entity Recognition, but many will have a few thousand loosely aligned translated sentences, which might allow a system to perform comparably to a fully supervised one, if not better.

Concluding remarks

While the analysis of the data sheds enough light on the nature of short message communications to undertake the work presented here, there is still much that remains unknown. Which languages are currently being used to send text messages? We simply don’t know. There may be dozens or even hundreds of languages that are being written for the first time, in short bursts of one or two sentences. If text messaging really is the most linguistically diverse form of written communication that has ever existed, then there is still much to learn.

Rob Munro, August 2012