Tracking Epidemics with Natural Language Processing and Crowdsourcing

The world’s greatest loss of life is due to infectious diseases, and yet people are often surprised to learn that no one is tracking all the world’s outbreaks. The first indication of a new outbreak is often in unstructured data (natural language) and reported openly in traditional or social media as a new ‘flu-like’ or ‘malaria-like’ illness weeks or months before the new pathogen is eventually isolated.

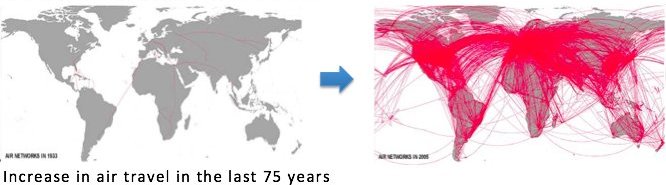

Since the Spanish Flu killed more people than the First World War, we have connected the world but eradicated only smallpox.

In 2011, I was the Chief Technology Officer of EpidemicIQ, an initiative that aimed to track all the world’s disease outbreaks. The result was an order of magnitude larger and more sophisticated than any other disease-tracking system. It made me appreciate how unready the world is to respond to epidemics.

I have discussed and presented this work in a number of circles. This post builds on feedback from two primary sources. The first is a paper presented at a workshop on crowdsourcing and artificial intelligence last year:

- Robert Munro, Lucky Gunasekara, Stephanie Nevins, Lalith Polepeddi and Evan Rosen. 2012. Tracking Epidemics with Natural Language Processing and Crowdsourcing. Spring Symposium of the Association for the Advancement of Artificial Intelligence (AAAI), Stanford. http://www.robertmunro.com/research/munro12epidemics.pdf

The second is an address to the UN General Assembly on “Big Data and Global Development”.

I am regularly recognized in ‘big data’ circles because of this video (there’s a cleaner version somewhere that I’ll add if I find it). It was the first presentation of its kind to the UN General Assembly, and our work ended up in a number of publications like Time Magazine and the New York Times. I also received the ‘Data Hero’ award for social good at the first Data Science Summit, and a film crew wanted to turn our project into a reality show (we politely declined). Apart from the two presenters, mine was the only interview that made the cut for the presentation to the General Assembly, so I am grateful for the coverage. But like so many things that get media attention, the truth diverges from the popular narrative.

Even if you do not work in epidemiology, I’m sure it is obvious to you that there is great responsibility in managing information about at-risk individuals. This is clear in the example I give in the video:

- “With a recent outbreak of Ebola in Uganda, we were tracking it a little earlier than other health organizations, but more importantly we were able to pull in much richer data from a larger number of sources, so we knew not just how many people were infected, but what kind of transport they took when they went from their village to the hospital in the nearest main town.” Robert Munro

Unfortunately, the interview cuts me off mid-utterance at this point. I know Uganda fairly well: I traveled across Uganda and neighboring countries by bicycle, and I almost ended up running a disaster preparedness program there last year. I keep in touch with many of the people I met while cycling. I hear stories about additions to their families, changes in politics, and occasionally stories about killing people who are demons and vampires. Old beliefs remain strong in some areas, and we are not changing patterns of discrimination as quickly as we are connecting people.

For the girl who was diagnosed with Ebola, her village was named in reports, and this was a region where victims of diseases are often vilified and sometimes killed. She was likely the only person from her village who had been rushed to a hospital at that time (and even more likely the only girl in her age bracket). It would have been simple for everyone from her village to make the connection immediately. At EpidemicIQ, we decided not to publish this information, but many other health organizations did. Her diagnosis was ultimately incorrect, which doesn’t really affect the anonymization issue, but it makes any identification or vilification even more disturbing.
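The re-identification risk described above is what privacy researchers call k-anonymity: a published record is only as anonymous as the number of people who share its combination of quasi-identifiers (here, village, sex and age bracket). The sketch below is a minimal illustration of that check, assuming hypothetical field names and invented records; it is not a description of EpidemicIQ's systems.

```python
from collections import Counter

# Hypothetical quasi-identifiers: harmless on their own, identifying in combination.
QUASI_IDENTIFIERS = ("village", "sex", "age_bracket")


def group_sizes(records, keys=QUASI_IDENTIFIERS):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(record[k] for k in keys) for record in records)


def k_anonymity(records, keys=QUASI_IDENTIFIERS):
    """Return k, the size of the smallest group; k == 1 means someone is unique."""
    sizes = group_sizes(records, keys)
    return min(sizes.values()) if sizes else 0


def unsafe_groups(records, k, keys=QUASI_IDENTIFIERS):
    """Return the combinations shared by fewer than k records (too identifying to publish)."""
    return [combo for combo, n in group_sizes(records, keys).items() if n < k]


# Invented example records, not real case data.
reports = [
    {"village": "Village A", "sex": "F", "age_bracket": "10-19"},
    {"village": "Village B", "sex": "M", "age_bracket": "30-39"},
    {"village": "Village B", "sex": "M", "age_bracket": "30-39"},
]
print(k_anonymity(reports))          # 1: the Village A record is unique
print(unsafe_groups(reports, k=2))   # [('Village A', 'F', '10-19')]
```

A k of 1 is exactly the situation in the Ebola report: naming the village alone was enough to single out one person.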

We were information managers and health professionals, not lawyers, and the international aspect no doubt complicates things. I assume that the health organizations who did publicize this acted within the law. For us, this wasn’t enough. If it was reported in a health journal 5 years later? That might be ok. But as a real-time report, it was clearly unethical to repeat this information somewhere that would make it more accessible or be perceived as more authoritative. I doubt that the other health organizations who re-published this information did so out of malice (it was one piece of information among many), but it highlights the problem.

Andreas Weigend, one of the two presenters, acknowledges the potential in the video:

- “we have invented something powerful. As with anything powerful it is not black or white. It is our responsibility to communicate trade-offs, so we can use the data responsibly [and] preserve people’s rights” Weigend

The problem is that they did not communicate the trade-offs that I laid out, cutting me off and omitting my conclusion that we should be increasing privacy. The address to the UN General Assembly ends there, concluding the opposite of what I had actually told them:

- “There is a public good nature to it and you only get to use the advantages which we have created here if people actually share their data” Weigend

I do not believe this to be true. This rewrite of my conclusion was made without my permission or consent, and it promotes a practice that I oppose: being more open with data about at-risk individuals.

Actionable private data

The problem comes from open-data-idealists, who believe that so long as we can share information at scale, the problem is solved. This is misleading and dangerous.

I will give the benefit of the doubt to Peter Hirshberg and Andreas Weigend. They had 100s of data sources and many interviews to choose from, and perhaps my story became separated from my own conclusions without them noticing. Regardless, they are still liable for perpetuating dangerous behaviors in information management.

While my own conclusions about my work were cut out, other interpretations were permitted. In the presentation to the UN General Assembly, the real-time data for an E. coli outbreak in Germany are real, but the graphics are invented. They were a post-hoc visualization of the data created specifically for the presentation, and do not represent any part of EpidemicIQ’s internal dashboard. Sped up, the visualization is compelling, but in real time, who is going to watch a heat-map (not adjusted for population density) for data coming in at about two reports an hour?

At EpidemicIQ, we never really found a solution for how to present information for actionable response to epidemic tracking. That’s partially because the people at the front line represent the full breadth and complexity of humanity:

As with every other type of organism, the majority of the world’s pathogens come from the band of land in the tropics. 90% of our (land-based) ecological diversity comes from 10% of the land. The same is true for our linguistic diversity, and it is the same 10%.

So, the first signal about an outbreak can be reported in any one of 1000s of languages, and concentric animated dots on a map aren’t going to help reach those people to help with either reporting or response.

We focused only on the detection problem, and used a combination of natural language processing and machine learning:

We implemented adaptive machine-learning models that could detect information about disease outbreaks in dozens of languages. When the machine-learning was ambiguous, microtaskers (crowdsourced workers) employed via CrowdFlower would correct the machine-output in their native language. Their output would then update the machine-learning models. As a result, we could pull in information from across the world and process it in near real-time, dynamically adapting to changing information (see the paper for more details).
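The loop described above is a form of active learning with humans in the loop: the model handles the reports it is confident about, and people handle (and teach it) the rest. The sketch below is a minimal illustration under stated assumptions, not EpidemicIQ's implementation: a toy Naive Bayes classifier stands in for the adaptive models, a hypothetical `fake_crowd` function stands in for microtaskers on a platform such as CrowdFlower, and the 0.8 confidence threshold is arbitrary.

```python
import math
from collections import Counter, defaultdict


class IncrementalNaiveBayes:
    """Toy multinomial Naive Bayes text classifier that learns one example at a time."""

    def __init__(self, labels=("outbreak", "other")):
        self.labels = labels
        self.doc_counts = Counter()              # documents seen per label
        self.word_counts = defaultdict(Counter)  # word frequencies per label
        self.vocab = set()

    def update(self, text, label):
        words = text.lower().split()
        self.doc_counts[label] += 1
        self.word_counts[label].update(words)
        self.vocab.update(words)

    def predict_proba(self, text):
        words = text.lower().split()
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label in self.labels:
            # Add-one (Laplace) smoothing so unseen words never give zero probability.
            prior = (self.doc_counts[label] + 1) / (total_docs + len(self.labels))
            n_words = sum(self.word_counts[label].values())
            denom = n_words + len(self.vocab) + 1
            score = math.log(prior)
            for w in words:
                score += math.log((self.word_counts[label][w] + 1) / denom)
            log_scores[label] = score
        # Convert log scores to probabilities, subtracting the max for numerical stability.
        top = max(log_scores.values())
        unnorm = {label: math.exp(s - top) for label, s in log_scores.items()}
        total = sum(unnorm.values())
        return {label: p / total for label, p in unnorm.items()}


def process_stream(model, reports, ask_crowd, threshold=0.8):
    """Label each report, sending low-confidence ones to human workers and learning from them."""
    results = []
    for text in reports:
        probs = model.predict_proba(text)
        label, confidence = max(probs.items(), key=lambda item: item[1])
        if confidence < threshold:
            label = ask_crowd(text)    # hypothetical stand-in for a microtask
            model.update(text, label)  # the human judgment becomes new training data
            source = "crowd"
        else:
            source = "model"
        results.append((text, label, source))
    return results


def fake_crowd(text):
    """Hypothetical worker: in practice a native speaker would read the report."""
    return "outbreak" if "fever" in text.lower() else "other"


model = IncrementalNaiveBayes()
model.update("new flu-like illness reported at district hospital", "outbreak")
model.update("football match results from the weekend", "other")
results = process_stream(model, ["dozens admitted with fever and vomiting"], fake_crowd)
print(results)
```

In this toy run the classifier has seen too little data to be confident, so the report is routed to the stand-in worker, and that judgment is added to the training data before the next report arrives.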

One of the most promising outcomes was that we were ahead of any other organization in identifying outbreaks, including prominent solutions that used search engines and social media. This is also in contrast to many data collection methods favored by open-data-idealists.

CNN is faster than Social Media and Search Logs

A popular recent approach to monitoring diseases, if not responding to them, has been to use social media and search logs.

Search-log-based approaches like Google Flu Trends look at the frequency of searches for terms like ‘fever’ and correlate them with clinical data. In the flagship case, Google Flu Trends tracked a flu outbreak in the mid-Atlantic region of the US two weeks ahead of the Centers for Disease Control and Prevention (CDC).
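At its core, this kind of system compares how often symptom terms are searched with how many cases clinics report over the same weeks. A minimal sketch of that comparison using Pearson's correlation coefficient; the weekly numbers are invented, and Google Flu Trends' published model was more involved than a single correlation.

```python
import math
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a flat series carries no signal to correlate
    return cov / (sx * sy)


# Invented weekly series for one region: share of searches mentioning 'fever',
# and influenza-like-illness visits reported by clinics in the same weeks.
search_share = [0.010, 0.012, 0.015, 0.021, 0.030, 0.026, 0.018, 0.013]
clinic_visits = [120, 135, 170, 240, 330, 300, 210, 150]

r = pearson(search_share, clinic_visits)
print(f"correlation between searches and clinical data: {r:.2f}")
```

A high correlation says the two series move together; it says nothing about whether the same information was already available elsewhere, or earlier.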

In similar approaches, people are discovering and leveraging predictive terms on social media, Twitter in particular.

When we set out to test these new methods, we tried something that the previous systems had not: we looked to see if the information was already out there. It seems obvious, but neither Google Flu Trends nor the Twitter research had compared their results with existing information that was freely available.

For the Google Flu Trends flagship case of the mid-Atlantic region, we found open reports by news agencies, including CNN, that pre-dated Google Flu Trends by three weeks:

- “I’m Jacqui Jeras with today’s cold and flu report … across the mid-Atlantic states, a little bit of an increase here” CNN, 3 weeks before Google Flu Trends

With this new information, it looks like a common order of events for search-log-based approaches is something like this:

1. A flu strain starts spreading through a region.
2. The increase is noticed first by people who observe large parts of the community (e.g. teachers) and by health care professionals.
3. Information about the increase is spread informally through traditional and social media, and more formally through official reporting channels.
4. People are more worried as a result of knowing that the flu is spreading.
5. When early cold or flu symptoms appear, those more-worried people are more likely to search for their symptoms, for fear that it is the worse of the two.
6. A signal appears in search logs or in social media queries.
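The ordering above can be checked directly: if searches mostly echo earlier coverage, the news series shifted forward by a few weeks should line up with the search series better than the unshifted one. A minimal sketch of that lead-lag check, assuming two equal-length weekly series (invented here) and a hypothetical `max_lag` search window; it illustrates the idea and is not a reconstruction of EpidemicIQ's or any published analysis.

```python
import math
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation; a flat series is treated as uncorrelated."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def best_lag(leader, follower, max_lag):
    """Find the lag (in weeks) at which follower[t] best matches leader[t - lag].

    Both series must cover the same weeks. Returns (lag, correlation);
    a best lag > 0 suggests the follower echoes the leader.
    """
    best = None
    for lag in range(max_lag + 1):
        xs = leader[: len(leader) - lag]
        ys = follower[lag:]
        if len(xs) < 3:
            break  # too few overlapping weeks to say anything
        r = pearson(xs, ys)
        if best is None or r > best[1]:
            best = (lag, r)
    return best


# Invented weekly counts: news reports about flu, and flu-related searches that
# (by construction here) trail the news by three weeks.
news = [1, 2, 4, 8, 12, 9, 5, 3, 2, 1, 1, 1]
searches = [0, 0, 0] + news[:-3]

lag, r = best_lag(news, searches, max_lag=5)
print(f"searches best match news shifted by {lag} weeks (r = {r:.2f})")
```

With real data the best lag will rarely be this clean, and a high correlation at a positive lag is consistent with, but does not prove, the media-driven sequence in the list above.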

We first reinterpreted these social signals in this way almost two years ago, and our interpretation was confirmed in a Nature publication earlier this year. It describes a more complex and interesting interaction of social and technological systems than the creators of Google Flu Trends first theorized, but the search signal is ultimately a secondary one.

(Two caveats. One: at EpidemicIQ we never found a signal in social media that wasn’t already present in traditional online media, but this is a moving target and we didn’t parse all information from social media streams. Two: the methodology of the papers that I linked to above is solid, and both are fascinating to read, even if they do not necessarily present methods for early disease detection.)

Push back against the open-data pedlars

For people who want to work in information management for social good and believe that open data is inherently good, Evgeny Morozov’s The Net Delusion: The Dark Side of Internet Freedom should be mandatory reading. The book is primarily based around the “Internet Freedom” speech that Hillary Clinton gave in 2010, which Morozov (and others) see as the most prominent recent speech regarding open data.

For much of the book, especially the chapter “Texting Like It’s 1989”, Morozov systematically debunks each of the assumptions about any inherent benefit to open data. Clinton’s speech used an example of mine, too:

- And on Monday, a seven-year-old girl and two women were pulled from the rubble of a collapsed supermarket by an American search-and-rescue team after they sent a text message calling for help.

This was the final example before Clinton launched into the main body of her speech. As with the UN address, I was the source of this information, too. At the time, I was running a text-message-based emergency reporting system following a devastating earthquake in Haiti, supporting 1000s of Haitians. Clinton was quoting from a situation report that I had written the day before for the US State Department. We were attempting (ultimately successfully) to get the US State Department to fund the initiative by employing people within Haiti. This initiative, Mission 4636, kept data private. The US State Department agreed to keep the system private by funding the employment of people within Haiti to process the emergency messages, rejecting competing applications from ‘open data’ initiatives (the majority of them non-Haitian) to take over the system.

Like the presentation to the UN General Assembly, my work in Haiti has also been misrepresented as an open initiative, albeit not by anybody of consequence. Like Weigend, people who weren’t involved are (still) publishing articles where they pretend it was an open initiative, republishing the personal details of many people within Haiti, children included. At least 90% of what has been published about ‘open data’ in the response to the Haiti earthquake has been taken from the handful of situation reports I wrote at the time, and those reports all clearly indicated the importance of privacy. As with the UN General Assembly address, presenting this work as a success for open data is misleading and dangerous: it wrongly encourages people to post open data about at-risk individuals.

The outcome of all this?

The two most prominent ‘data for good’ speeches in recent time falsely portrayed closed initiatives as open. That’s how weak the open data movement truly is.

I am (with no sarcasm) genuinely flattered that two initiatives I ran have been so prominent. I ran both while on leave from Stanford, where I was completing a PhD focused on Natural Language Processing and Social Good. I’m saddened that the narratives of my work have been hijacked by people looking for quick fixes. It is sadder still that the open data movement has so little to show for itself that it needs to edit my work, often mid-sentence, to scrape together its arguments. On the positive side, it helped me decide that, in order to have the greatest impact on the world, I needed to take the long view. That’s why I launched my company, Idibon, to tackle the problem of processing information at scale in any language.

Conclusions: Treat big data like rocket science

Many of the statistics that go into rocket science are also used in machine learning and scalable information management. The results are just as powerful: as in the example I give in the UN General Assembly video, I could easily detect what kind of transport was taken by a potential Ebola victim. The ways that this information could be used to hurt this victim greatly outnumber the ways that I was in a position to help. The responsibility for managing sensitive data at this scale is immense.

The power of a rocket means that it needs to be regulated, with access limited to professionals. The same is true for information management for social good. The data from global monitoring systems should not be open, but should be limited to professionals. I went into EpidemicIQ thinking that we might be able to release the system to be used by anyone to process data at scale. I came out of EpidemicIQ knowing that it is unethical to put these tools and data in the hands of the public well before we even appreciate their full potential.

Robert Munro

July 2013

PS: On a personal note, many people have asked me why I rejected becoming a ‘social entrepreneur’ and instead became a real one. The decision came down to how I could have the greatest impact on the world over time. This post serves as a partial explanation.