Sudden onset translation

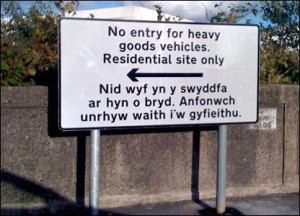

Complications of digital translation - the Welsh really translates as: 'I am not in the office at the moment. Send any work to be translated.'

Now add to that the fact that I might not speak the same language. This might sound like an additional complication, but it is becoming less so. The number one ‘portal’ through which aid agencies interact with crisis-affected communities is still the window of the ubiquitous NGO Land Rover. Don’t speak the same language? Too bad. But the connected world makes real-time or near-time translation a much broader and more scalable possibility. We have already seen this happen – among the hardest places in the world to find Haitian Kreyol speakers in January of this year was the headquarters of the earthquake relief effort at Port-au-Prince airport. Willing volunteer translators in Port-au-Prince were not permitted to join the aid workers in the compound because of security concerns, and potentially important information went unread. Meanwhile, thousands of Haitians outside of the country were looking for ways to help.

By some estimates (well, mine) there are about 5,000 languages currently spoken by people in the connected world. The way we are talking to one another cross-linguistically is changing very quickly, as are the means for doing it.

Machine-translation currently tends to employ a fairly shallow strategy, where algorithms learn the optimal replacements and reorderings of words/phrases from large histories of parallel data, rarely abstracted to anything we would call ‘meaning’ (although there are an increasing number of small moves in this direction). Human translation is very much the opposite – translators have a deep knowledge of the source and target languages and the context of a given utterance, applying systematic methods for translation and review. These are both somewhat separate from the informal translations given on-the-fly between people speaking different languages in everyday interactions.

But there is a new kid on the block that is encroaching on this: crowdsourced translation. It is broadly defined, but typically involves online non-professional translation. This ranges from the free translation of the Facebook platform by its users to low-cost translation by people working on crowdsourcing/microtasking platforms like MTurk. For machine translation this has been a successful partnership – crowdsourced translations can create novel sets of parallel data (see Vamshi Ambati, Stephan Vogel and Jaime Carbonell’s ‘Active Learning and Crowd-Sourcing for Machine Translation’ and Chris Callison-Burch’s ‘Fast, Cheap, and Creative: Evaluating Translation Quality Using Amazon’s Mechanical Turk’). For professional translators, I recently heard Jost Zetzsche characterize crowdsourcing as the straw that broke the camel’s back: after years of losing potential work to machine translation, along comes the ‘crowd’ who will also translate for relatively little or no cost (abstract for Zetzsche’s talk at ‘Crowdsourcing and the professional translator’).

The leaders in machine translation, traditional translation and crowdsourced translation all sat down together for the first time just a few weeks ago at the AMTA 2010 Workshop – Collaborative Translation: technology, crowdsourcing, and the translator perspective (with thanks to the foresight of Alain Désilets for organizing this and getting everyone in the same room!). The professional translators were rightly worried that they would lose work to crowdsourcing. With great tact I told the president and president-elect of the ATA ‘you are already the crowd’. I needed to qualify that then, too. What I meant was that crowdsourcing for translation is simply an online service, and from a service requester’s perspective making a request from a crowdsourcing platform is not necessarily so different from making translation requests from professional translators online. Professional translators are well-placed to share platforms with crowdsourced translators. The translation start-up myGengo is a good example of this multi-tiered approach, where there are different pay rates for different qualities of translation. Pro tip for crashing translator parties: professional translators, when meeting for the first time, will ask “what is your pair?”, meaning what language pair do you translate to/from.

Because of the changing nature of how we interact with language, professional translators will inevitably see their industry change. But they can also see it grow. We are translating documents today that we never considered translating previously. Even Twitter recently announced that Twitter trends will now be multilingual through auto-translation. Who ever thought about translating their passing thoughts just a few years ago? The more the connected community expects translation, the greater the potential that some of the translations will require professionals.
It is easy to imagine this even within single documents: professional translation for headings and introductions, crowdsourced translation for the body text, and machine-translation for cited/related works. There may well be a net gain in work for professional translators.

There was one point of agreement between all the attendees at the workshop: for sudden onset crises, crowdsourced translation is essential. In the event of a sudden onset crisis, people will immediately begin using their communication technologies (and their languages) to report their situations, request help, and seek out loved ones. Under-resourced languages are underrepresented in the professional translation and machine translation communities (by definition) and some 99% of these 5,000 languages are under-resourced. I presented briefly on this (Crowdsourced translation for emergency response in Haiti: the global collaboration of local knowledge) and there were no arguments against this strategy, only about the correct quality control methods and platforms to use. In our case we began using open-source software but quickly moved to CrowdFlower as the host for robust quality control and reliability. Most people at this workshop had similarly moved to commercially developed platforms, although often with custom wrappers. It seems the core of microtasking has gone the way of server hosting and search: a task for which you use large-scale industry solutions. The exception was the continued use of the specialized but flexible Pootle translation software – an open source collaboration for translation with broad use among academic, volunteer and commercial translators.

(As an aside, it was great to even have this discussion with the leaders of the field: there are not many mainstream venues that actively seek out a humanitarian component, but I have seen humanitarian sessions at every crowdsourcing/microtasking conference/workshop in the past year! This particular workshop also included Naomi Baer, who runs Kiva’s Translation Platform, and Will Lewis and Vikram Dendi, who launched a Haitian Kreyol machine-translation system in impressive time. Despite there only being a few dozen people, it felt like the kernel of a new global strategy for translation.)

There is a place for machine-translation, crowdsourced translation and professional translation in humanitarian work. What machine-translation lacks in precision it gains in scale. It can also expand the potential worker community: even if translations are not reliable, they may be accurate enough to allow topic-identification and prioritization. Last month I worked on machine-translation for a UN OCHA earthquake simulation in Colombia as part of an evaluation of the recently formed Standby Taskforce. As part of the exercise, over several days we tested the processing and structuring of information from emergency text messages in the wake of an earthquake in Bogota. For this I created a new auto-plugin for the Ushahidi crisis map that attempts to identify the language of an incoming message and translate it into given target languages via Google and Bing’s translation services. This was a first pass at translation, in negligible time, so that responders and information managers of any language can all understand the messages as quickly as possible. The translations were then corrected by crowdsourced volunteers. It is up to the independent observers to make the final conclusions about the potential success of the simulation as a whole, but for the translation part I was monitoring I can report success for English-only speakers in identifying priority messages using the machine-translations – an automated expansion of our value-adding workforce beyond the language of the crisis-affected community. In many ways this was historic: we combined machine-translation and crowdsourced translation seamlessly into a UN crisis response system. As far as I know, it is the first time that artificial intelligence and crowdsourcing have been incorporated into the United Nations information management systems. It was extremely informative to be able to do this in the context of a simulation.
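
For readers who want to picture that first pass, here is a minimal sketch in Python. It is not the plugin's actual code: the stopword lists, the `guess_language` heuristic, the `first_pass` function and the `fake_translate` stand-in are all hypothetical names I am using for illustration, and a real deployment would call an external translation service where the stub is.

```python
import re
from typing import Callable, Dict, List, Optional

# Tiny, hypothetical stopword lists; a real language identifier would use far more evidence.
STOPWORDS: Dict[str, set] = {
    "es": {"el", "la", "de", "que", "en", "y", "un", "una", "se", "hay"},
    "en": {"the", "of", "and", "in", "is", "a", "to", "we", "are"},
}


def guess_language(text: str) -> Optional[str]:
    """Guess the language by counting stopword overlaps; None if nothing matches."""
    words = set(re.findall(r"\w+", text.lower()))
    scores = {lang: len(words & stop) for lang, stop in STOPWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def first_pass(message: str, targets: List[str],
               translate: Callable[[str, str, str], str]) -> dict:
    """Detect the source language, machine-translate into each other target,
    and flag the result so that volunteers can correct it afterwards."""
    source = guess_language(message)
    translations = {}
    if source is not None:
        for target in targets:
            if target != source:
                translations[target] = translate(message, source, target)
    return {
        "text": message,
        "source": source,
        "translations": translations,
        "needs_review": True,  # every machine translation goes to the crowd
    }


def fake_translate(text: str, source: str, target: str) -> str:
    """Stand-in for an external machine-translation service (assumption)."""
    return f"[{source}->{target}] {text}"


if __name__ == "__main__":
    print(first_pass("hay personas atrapadas en el edificio", ["en", "es"], fake_translate))
```

The point of the `needs_review` flag is the design choice described above: the machine output is only ever a first pass, good enough for prioritization, with human correction as the next step.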
(With special thanks to Anahi Ayala Iacucci for coordinating the workers, George Chamales for customizing the Ushahidi platform, Helena Puig Larrauri for working with the translation and analysis teams, Jaroslav Valůch for his watchful monitoring and everybody who responded to our open call for volunteers!)

Despite this small step for machine-kind, it seems that automated translation was least accurate at identifying location names. This feedback was from Marta Poblet, one of the translators:

- I realized while checking the automated translation that it would translate as proper names words that were not: for example, the past tense of “fall” (“cayó”, or “cayo” usually without the accent in SMS) was translated as Gaius (a Latin jurist?). Conversely, names of neighborhoods such as Salitre or Puerta al Llano were not recognized as such and were unnecessarily translated.

This is clearly problematic. Identifying locations is one of the most important tasks for structuring emergency messages, so this component would require an initial manual translation in many cases or mapping by a native speaker. This still creates a potential bottleneck by requiring bilingual speakers for at least one early step in the process. Early work in ‘monolingual translation’ (see Chang Hu, Benjamin B. Bederson and Philip Resnik’s ‘Translation by Iterative Collaboration between Monolingual Users’) and in named entity recognition for multilingual SMS indicates that the future of translation may be able to circumvent this, but such techniques are in their infancy.
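
One way to picture the "mapping by a native speaker" step is a short list of local place names that are swapped for placeholders before machine translation and restored afterwards. The sketch below is an illustration under assumptions, not something we ran in the simulation: the gazetteer, the placeholder format and the word-by-word `toy_translate` function are all hypothetical, and a real translation service may not leave placeholders untouched.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical gazetteer: names a native speaker has marked as "do not translate".
GAZETTEER: List[str] = ["Salitre", "Puerta al Llano"]

# Toy word-by-word dictionary standing in for a machine-translation service.
TOY_DICTIONARY: Dict[str, str] = {
    "cayo": "fell", "un": "a", "edificio": "building",
    "en": "in", "salitre": "saltpeter",
}


def toy_translate(text: str) -> str:
    """Replace each known word; unknown words (and placeholders) pass through."""
    return " ".join(TOY_DICTIONARY.get(word.lower(), word) for word in text.split())


def mask_places(text: str, gazetteer: List[str]) -> Tuple[str, Dict[str, str]]:
    """Swap known place names for placeholder tokens; return text and token map."""
    mapping: Dict[str, str] = {}
    masked = text
    # Longest names first, so multi-word names win over shorter names inside them.
    for i, name in enumerate(sorted(gazetteer, key=len, reverse=True)):
        token = f"__LOC{i}__"
        pattern = re.compile(r"\b" + re.escape(name) + r"\b", re.IGNORECASE)
        if pattern.search(masked):
            masked = pattern.sub(token, masked)
            mapping[token] = name
    return masked, mapping


def unmask_places(text: str, mapping: Dict[str, str]) -> str:
    """Put the original place names back in place of the placeholder tokens."""
    for token, name in mapping.items():
        text = text.replace(token, name)
    return text


def translate_protecting_places(text: str, translate: Callable[[str], str],
                                gazetteer: List[str]) -> str:
    masked, mapping = mask_places(text, gazetteer)
    return unmask_places(translate(masked), mapping)


if __name__ == "__main__":
    message = "cayo un edificio en Salitre"
    print(toy_translate(message))  # fell a building in saltpeter
    print(translate_protecting_places(message, toy_translate, GAZETTEER))  # fell a building in Salitre
```

This only handles names someone has already listed, so it moves the bilingual bottleneck earlier rather than removing it, and it does nothing for the opposite error of ordinary words like “cayo” being treated as names.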

Finally, when combining machine-translation with expert correction it is hard to go past Meedan, an Arabic/English bilingual news sharing site. Users share links/comments in either language, which are then translated by machine and corrected by professionals. From my interactions with Ed Bice and Chris Blow it is clear they are both thought leaders in how cross-lingual mass communication is possible in a digital world. If you want to understand the complications and importance of translation, there is no better place to start than with an Arabic-English article on their site.

The future of how we talk to each other is changing and even crowdsourced translation looks nothing now like it did 12 months ago. Who knows how it will look in just a few more years?

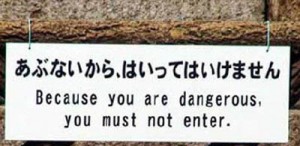

(ps: In lieu of solid conclusions I can offer what you really want: more bad translation photos)

Rob, Dec 6 2010